The May sun was shining through the windows, and the air was thick with a question no one had quite dared to name yet: Will AI take my job?

I smiled then, not with mockery but with memory. I had seen it before: good human beings, teachers, worried they would become less relevant in the future. I knew the answer, but I needed to draw it out in a way that would help the faculty learn how to reach that answer themselves, and help the very humans who teach our next generation see exactly how valuable they are and how much they are needed.

This blog begins with play. Not mastery. Not fear. Play.

That was the heart of my keynote at Maryville University. I stood before the faculty not as an oracle, but as a co-explorer. I said AI is a toy right now: a tool best understood by playing with it, experimenting, and learning through curiosity. It is not something to be feared, but something to be adopted and shaped by domain experts so that it becomes worthwhile. Just as reading and writing are cultural adaptations, AI is also a cultural adaptation. We were not biologically created to read; we devote years to learning it. AI, too, demands effort, but the rewards can be transformational. AI must be curated and shaped by individuals who know which specific patterns are meaningful. For a university, these individuals are the faculty and teachers, who understand what young humans need to know and learn to become the next generation of nurses and occupational therapists.

For these humans, the ones in the picture above, this work will never be “automated” away. They teach nurses in a human factors lab that looks like a hospital, having them role-play setting up the tubing, masking, sanitizing, putting on gloves, and ALL the things humans do. So much of what we do as humans must stay in the realm of physical knowledge and muscle memory. It is built through practice, and it is remembered through play. Teachers are desperately needed and wanted.

To emphasize these points, I will share two stories with you.

The first is about a question that surprised me:

"What does the Board of Trustees think the new direction for AI will be? What will be rolled out in the future?"

I answered by pointing to the audience and the people leading the charge. I named Sara Bronson, an extraordinary engineer who has streamlined the rollout of our Social Learning Companions (SLCs) from weeks to just days. Her team has received so many requests that they're now bursting at the seams with work. The excitement among the faculty piloting these companions is palpable. They're already seeing real results—nursing students staying engaged, getting support through their most difficult classes, and ultimately better prepared for the hands-on, high-stakes work they do in Maryville's human factors labs.

These AI systems weren't just theoretical—they were tangible. A showcase of this work was scheduled right after my talk, allowing every faculty member to see it in action.

And none of this happened in isolation. It was made possible through the visionary leadership of my friend Phil Komarny, who brought me into this work and created space for faculty leaders like Michael Palmer to embed AI into Maryville's culture in a way that aligns with the university's core values. We're talking about explainable AI. Systems that respect institutional knowledge, protect intellectual property, and run securely behind the university's firewall.

Phil is also the mind behind one of the boldest moves in Maryville's digital transformation—dismantling and replacing the traditional IT structure with a collective. This is a new model that shares accountability for data across business and technical teams. The goal isn't just the adoption of new tools but integration that augments human capabilities. It's not about adding more technology. It's about building the future together.

But then I let them in on something more human: the presence of nuns on the board who regularly ask me a more profound question.

How do we know we are making good humans?

Not just competent nurses who can use AI, but compassionate, thoughtful people. That simple question cut through the abstraction. It revealed the politics, the values, and the real stakes. It allowed me to bridge a divide between the board and the faculty, between oversight and mission.

This moment helped frame the purpose of AI not merely as a technological upgrade but as a moral and pedagogical opportunity. We, the faculty, are not being asked to keep up. We are being asked to lead and model responsible, joyful engagement with the tools our students will live with and within. AI is meant to augment humans, supporting students so they can focus on the human work rather than on examinations and paperwork.

The purpose of AI is to deliver higher-quality outcomes faster than humanly possible.

The second story came from another keynote, and another recurring question: Will there be faculty in a future where everyone has their own team of assistants?

I smile widely because I know the audience is ready to hear common-sense logic and find its own way to an answer. The question taps into a core fear that every human shares:

Will AI take my job, compromise my safety, affect my ability to provide for my family, and hinder my need to help the next generation?

This is not a naive concern or set of questions, but it is missing something fundamental. Most people assume that students use AI the same way they themselves do, and that assumption tells me who has not watched many students use AI. All humans have an egocentric bias, sometimes referred to as the curse of knowledge: once someone knows something, they struggle to imagine not knowing it. This is particularly common among experts, such as faculty members, when they try to empathize with students. But anyone who has watched a student interact with AI knows the assumption is far from the truth. The question isn't whether AI can write lesson plans, generate content, or even simulate a teacher. Of course it can. But that is not what makes a student. Or a teacher.

I tell the story of a bright-eyed, bubbling student who interrupted one of my keynotes, not with a critique but with curiosity, and wanted me to ask the AI what was most on their mind:

"How do I get rich without working hard?"

I tell the audience that this is not the voice of AI. It is the voice of someone trying to understand what AI is for.

I pause and stare at the audience. I need it to sink in.

I knew what the question revealed—that students, full of potential, are not yet fully formed. They lack the lived experience to wield AI wisely. AI is exceptional when you are already wise. It sharpens thought, organizes plans, and amplifies voice. But it cannot teach discernment on its own. That's where faculty come in.

Please take a deep breath, hold it, and exhale slowly with me. Here is the wisdom you can discover for yourself.

Younger humans haven't yet learned that they have to work to achieve success. Try it. Ask your students to show you how they use AI, and ask your "collective" team to show you what questions students are asking AI in the log files. See what they are using AI for, learn from them, and in doing so, you will find your fears allayed.

We all smile now because we know students still need us to deliver and curate specific content and to create tension. Tension, though uncomfortable, is the birthplace of learning. Ironically, it's the same tension that many faculty fear when confronting AI: the discomfort of not knowing, of being challenged, of evolving. But that discomfort is not a threat. It's an invitation. All faculty need to do is watch others use it.

Here is a brilliant quote from Admiral Grace Hopper.

"I've always loved working with young people. They ask questions I wouldn't think of asking, and they haven't been around long enough to be told what can't be done."

I reminded the audience that students are not looking for perfect information; they are looking for meaning, strategy, and values. They want to belong and be valued.

Machines that sense patterns in large volumes of data can’t tell which ones are important. This is why we will always need humans to curate the right patterns, the specific ones that are important and meaningful to us personally. This is the promise of AI: hyper-personalization. It cannot be achieved without understanding how the AI works, so it has to be an AI that can show its work by explaining why it made the choices it made.

This is why our roles as educators remain essential: not to control knowledge but to shape the lens through which knowledge is interpreted, not to resist AI but to help students ask better questions. To illustrate this, I gave them another exercise they can try on their own, using my bookshelf, something I had been wanting to do for years.

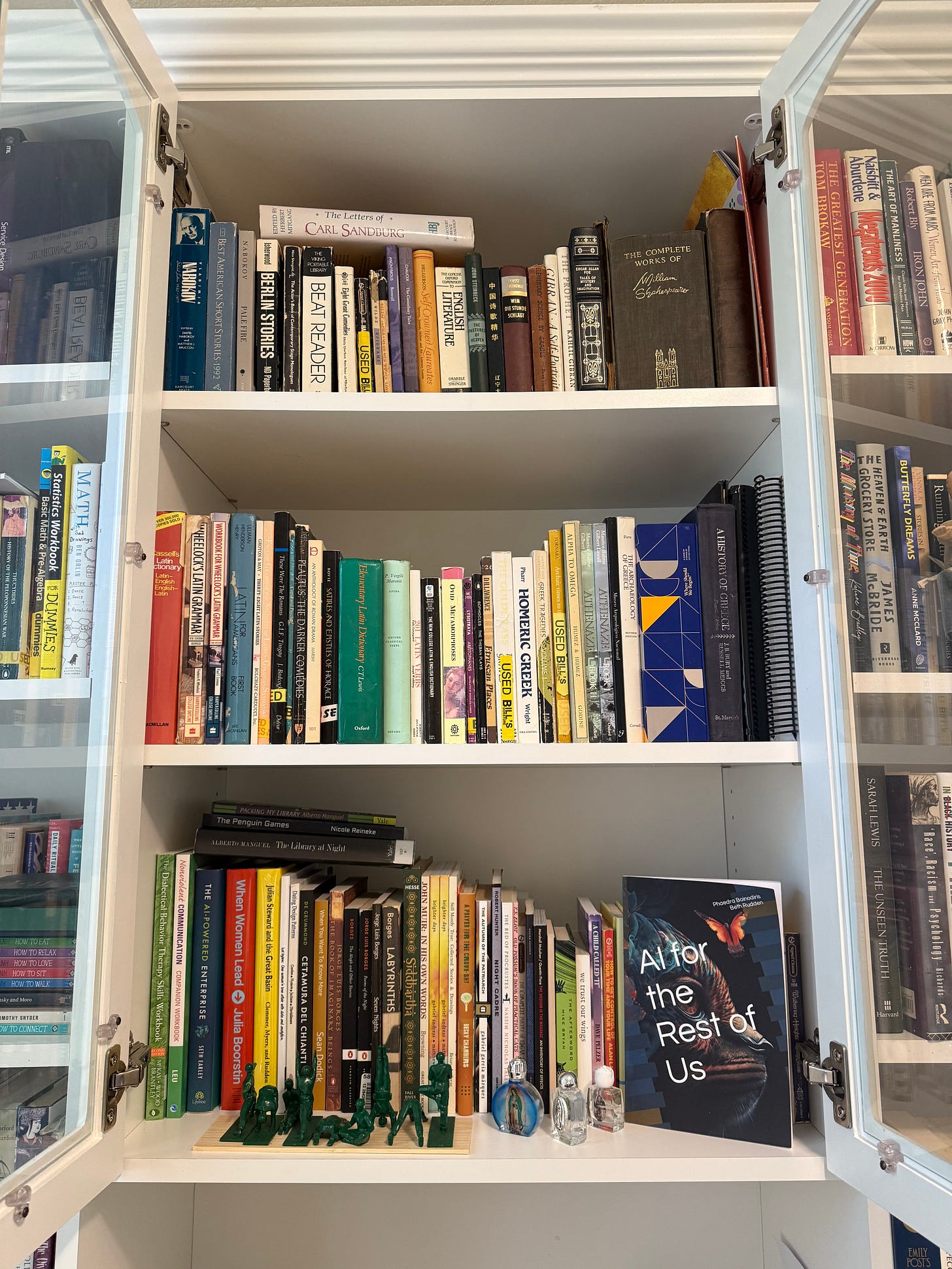

I showed them how I took a picture of my “special” bookshelf and asked AI to select which books I would curate into personas based on what it knows about me. I wanted AI to analyze the titles to deduce what I know, what I care about, and what patterns I follow. It could read the titles and authors, and yet it chose all the wrong books. It chose letters from Carl Sandburg, which is only on my shelf because of “foggy cat feet,” and then a novel that was there because my friend wrote it. It completely ignored my own book, “AI for the Rest of Us,” as well as my favorite authors, such as Shakespeare, Aristophanes, and Jorge Luis Borges. And the AI could never understand why a workbook on dialectical behavioral skills was in my “special” collection. It will never understand the nuance of a human keeping a filled-out workbook on a shelf behind glass unless a human teaches it that this pattern is vital to their data privacy.

This illustrates a key distinction: machines are brilliant at pattern recognition, but only humans can decide which patterns matter.

So, let this be our guiding metaphor. We are not just curators of content or conveyors of skill. We are pattern-makers, meaning-makers.

That is why AI is not a threat. It is a mirror, a companion, and a toy: a new way to peer into what makes us human and what makes students learn differently. My good friend Adam Cutler says:

AI is the electron microscope for the human condition.

To my colleagues at Maryville and beyond: let your students see how you use AI. Let them demonstrate how they utilize AI. Teach each other. Play. At Maryville, the students are the shining stars; this university has its priorities straight.

As a postscript, I want to pay tribute to Mark Lombardi, the leader I watched maneuver through his 64th board meeting.

I wouldn't even be in St. Louis or on the Board of Trustees were it not for the visionary leadership of Dr. Mark Lombardi. For 17 years, he has led Maryville with a fierce commitment to innovation and student-centered learning. His book Disruption outlines a roadmap for how higher education can evolve rather than retreat in the face of change. Under his leadership, Maryville hasn't just weathered disruption—it has harnessed it. The university stands as a beacon of what's possible when courage, clarity, and care guide institutional transformation. That foundation, built over years of steady, values-driven leadership, is why I knew this school could lead the world in rethinking what it means to teach, to learn, and to be human in the age of AI.

Acknowledgments and References:

Mark Lombardi — President of Maryville University. His book Disruption and 17 years of leadership have set the foundation for this moment in higher education.

Jennifer Yukna — Director of Academic Records and Policy at Maryville University. For her thoughtful leadership on the Student and Learning Outcomes Committee, which has given me a profound window into how Maryville shapes its nursing programs and measures what truly matters: student growth, readiness, and humane excellence. LinkedIn

Phil Komarny — For visionary leadership and the creation of the Collective at Maryville. Medium

Mykale Elbe — A true force in teaching and learning. Mykale’s commitment to innovation helped drive the integration of Social Learning Companions into student experiences, enabling learners to offload what machines do well so they can focus on the deeply human aspects of care and connection. LinkedIn

Jesse Kavadlo — For getting me on stage with the faculty and championing interdisciplinary innovation. LinkedIn

Gifty Blankson-Codjoe, Ph.D. — For being the best host and ensuring every detail was thoughtful and welcoming. LinkedIn

Michael Palmer, Sara Bronson, and team — For the extraordinary work reducing attrition through Social Learning Companions. LinkedIn Post

Kathy Quinn, Ed.D. — For holding the heart of Maryville and being my tour guide. With 35 years of service dedicated to student success, she exemplifies what it means to lead with care and continuity. LinkedIn

Bob Cunningham - For an amazing ad hoc tour of the teaching labs. LinkedIn

Erin Schnabel — For the wisdom quote: "AI is great if you're already wise."

Everyday Ethics for AI — A guiding framework from IBM. IBM Resource

Adam Cutler — For human-centered design of AI experiences. TED Talk

The Primer MK (Experimental) — Adaptive AI journey created by Adam Cutler. Custom GPT

GitHub Repository — Advanced prompting and custom instruction resources. GitHub

ChatGPT, Claude, You.com, Midjourney — For AI enrichment, conversational exploration, and visual storytelling.