The theme is metamorphosis, and the butterfly above is a Midjourney creation I made almost two years ago. Transformation isn’t just a technological shift; it’s a return. A metamorphosis back to what makes life rich and meaningful. I use AI not to distance myself from being human, but to reclaim it. To make space for what truly matters: making deep connections, spending time with the people I love, and feeling the sun on my face. In a world that glorifies busyness and optimization, this is the transformation I choose: to shift our norms away from constant acceleration and toward conscious presence. AI, used intentionally, can be the tool that gets us there. Here are some questions to start pondering how we can all make this shift together.

"How do I get more people to understand the limitations of current AI systems? How do I ask incredibly busy, hardworking people to use something that “simulates” efficiency? How can I help folks become wise enough to use these tools, so we can get back to what matters?"

This blog accompanies my keynote in Toronto on May 6th, 2025. I created it from a transcript of one of the rehearsals I recorded (above), and I am constantly astounded at how much I can put forth, AND at how much it takes me to rework things because I fall into the trap of the easy button. AI can do so many things it couldn’t do even three months ago, but just because it can do something doesn’t mean it should. When AI just provides words that sound good and you go back and actually use them, their meaninglessness is deafening. I start with an “amuse-bouche,” or palate cleanser, using Geoffrey Moore’s chasm metaphor to orient my audience and myself.

The chasm we're crossing isn't technological — it's cognitive

When Geoffrey Moore wrote about "crossing the chasm" in 1991, he was focused on the gap between early adopters and mainstream markets. But the chasm we face with AI today isn't about adoption rates or market penetration. It's about something far more fundamental: being human in an age of predictive machines.

AI isn't just crossing markets anymore—it's crossing minds. And the real chasm we're navigating isn't technological; it's cognitive. It's about culture. It's about meaning.

I started my career quite literally digging in the dirt, tracing human lives through the artifacts we built and left behind. From archaeology to anthropology, programming, and AI, this journey taught me that data isn't abstract. It's an artifact of human experience. People speak through data; data doesn't speak for itself.

No one is behind

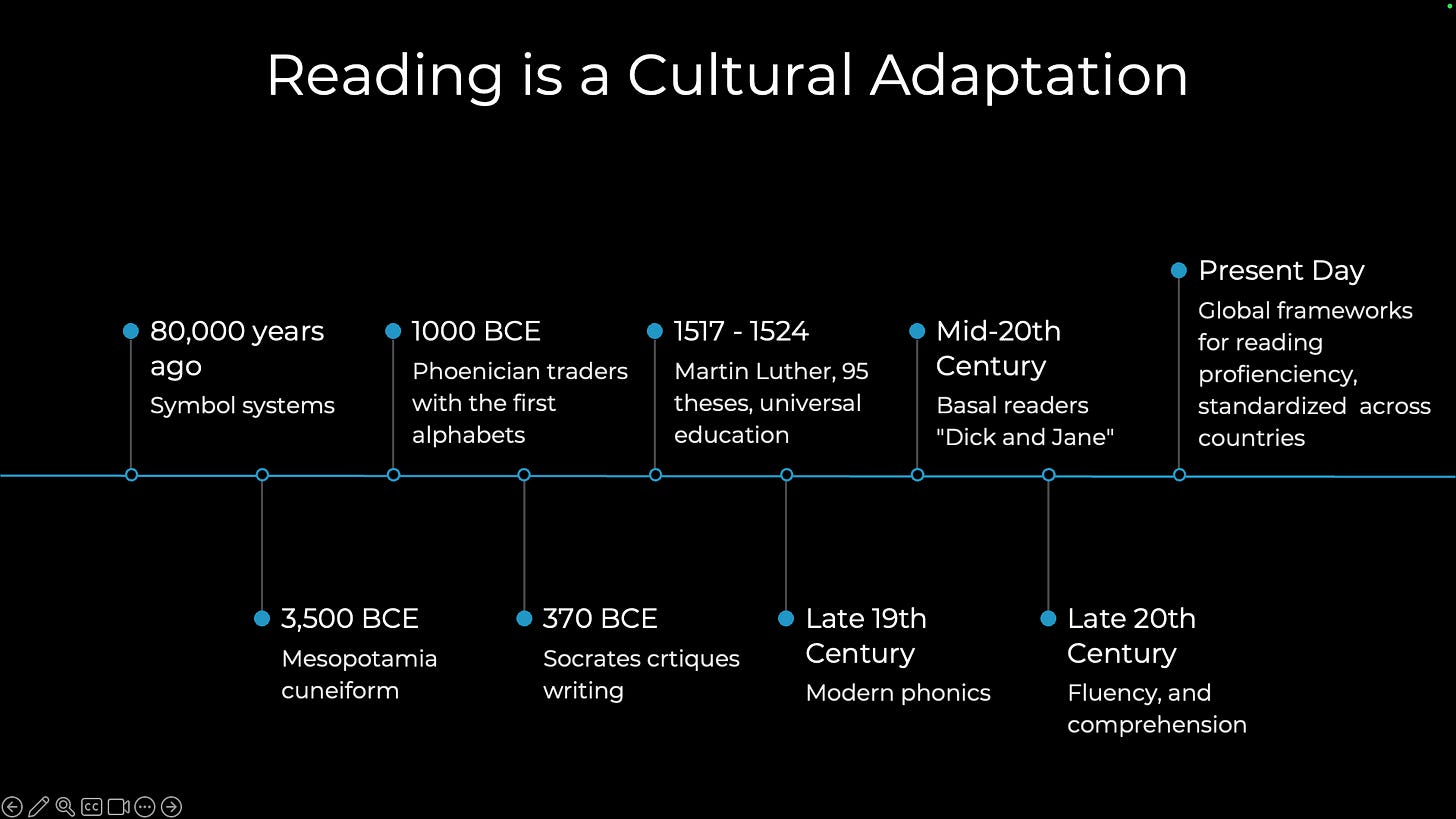

If we think about AI literacy in the same way we think about reading literacy, we have a long way to go as a human race. Consider that we are not biologically evolved to read: we've had to learn to read, and this learning requires friction, tension, and time. Similarly, we are not biologically evolved to know how to use AI in a way that augments humans rather than replacing them; this, too, requires intentional learning and cultural adaptation. The 80,000-year timeline in this section shows the journey it has taken humans to reach global frameworks for reading proficiency.

In developed countries, we spend 6-7 years of every human's life teaching them how to read.

Take a deep breath with me.

And let it out.

Rest in the knowledge that you have time to enter this AI revolution. You are needed. You are wanted. There is so much work to be done, and the rewards for engaging with this work are potentially transformative beyond what we can imagine today.

Three invitations

I want to extend three invitations as we navigate this transformation together:

1. Share your expertise

If we want AI for everyone, all humans need to share what they know, not just the few who are building the models. Specifically, I want people to share their stories, what makes them special, and how they view the world. How are you using AI?

Domain expertise is the missing link in AI. Domain experts (nurses, engineers, construction workers, plumbers, policymakers, teachers) provide the context models need.

Without context, models fail to be anything but a toy—or a noose.

88% of AI pilots never reach production. Why? Because they optimize for accuracy, not application. The model doesn't know what matters. It can sense a pattern but doesn't know which patterns matter.

Patterns do not equate to meaning. Only domain experts can tell us which patterns matter, because they have a relationship with the information. Those of us who are data scientists and software engineers will never grasp what is needed unless it falls within our own domain of knowledge; otherwise, we don't have the right relationship to the information. We don't have the knowledge.
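To make the accuracy-versus-application point concrete, here is a minimal Python sketch with invented numbers (the 1,000 cases, the 2% rare-event rate, and the `always_negative` "model" are all hypothetical, not drawn from any real pilot). A model that learns only the dominant pattern can post a high accuracy score while catching none of the cases a domain expert would say actually matter.

```python
# Hypothetical data: 1,000 cases, of which only 20 are the rare event that matters.
labels = [1] * 20 + [0] * 980


def always_negative(case_id: int) -> int:
    """A hypothetical 'model' that learned only the dominant pattern: nothing happens."""
    return 0


predictions = [always_negative(i) for i in range(len(labels))]


def accuracy(preds: list[int], truth: list[int]) -> float:
    """Share of all cases predicted correctly."""
    return sum(p == t for p, t in zip(preds, truth)) / len(truth)


def recall(preds: list[int], truth: list[int]) -> float:
    """Share of the cases that matter (label 1) that the model actually caught."""
    caught = sum(p == 1 and t == 1 for p, t in zip(preds, truth))
    return caught / sum(truth)


print(f"accuracy: {accuracy(predictions, labels):.0%}")  # 98%
print(f"recall:   {recall(predictions, labels):.0%}")    # 0%
```

Recall is only one of many possible measures here. Deciding which measure reflects what matters is exactly the question a domain expert has to answer.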

2. Humans already have magic

Arthur C. Clarke wisely wrote, "Any sufficiently advanced technology is indistinguishable from magic." But here's the thing: we are the magicians. We create the data, the systems that make the data, the machines, the technology.

One piece of magic we can't replicate is true randomness. Humans are pattern-making machines, and when we try to simulate randomness, we make patterns instead. This is why encryption, blockchain, and lotteries rely on intense safeguards—we built extreme systems to achieve randomness.
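Counting streaks is one simple way to see this pattern-making in action. People asked to invent random coin flips tend to alternate too often and avoid long runs, while fair flips routinely produce streaks that feel suspicious. The minimal Python sketch below compares a hand-written "random-looking" sequence (a hypothetical example made up for this post, not real study data) against 40 flips from Python's `random` module. Note that `random` is a pseudorandom generator and not meant for security; Python ships a separate `secrets` module for cryptographic use, a small example of the safeguards mentioned above.

```python
import random


def longest_run(flips: str) -> int:
    """Length of the longest streak of identical consecutive symbols."""
    if not flips:
        return 0
    best = current = 1
    for prev, cur in zip(flips, flips[1:]):
        current = current + 1 if cur == prev else 1
        best = max(best, current)
    return best


# Hypothetical "human" attempt at 40 random coin flips, written for this sketch:
# it switches sides often and never lets a streak grow past two.
human = "HTHHTHTTHT" "HHTHTTHTHT" "HHTTHTHTHH" "THTTHTHHTH"

# 40 pseudorandom flips from Python's generator, seeded so reruns match.
rng = random.Random(2025)
machine = "".join(rng.choice("HT") for _ in range(len(human)))

print("human longest run:  ", longest_run(human))  # 2
print("machine longest run:", longest_run(machine))
```

Try a few different seeds: the machine sequence will usually contain a streak longer than anything in the hand-written one.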

Humans excel at recognizing what's special. We spot the drop in the water that changes everything and see the nuance, context, and meaning where machines only see probability.

Humans are exquisitely good at specificity. This is the essence of magic: knowing the difference between a man with a bullet belt across his chest and a gentle older man who stops by the afternoon youth group to teach chess, never raising his voice or threatening violence.

That’s the power of the particular: it overrides abstraction. While machines crunch aggregates, humans live in exceptions. We don’t just see data; we feel its edges. We know when a pattern is a rule and when it’s a rupture. This is why so-called “edge cases” aren’t fringe—they are the heart of meaning-making. And this is where brute statistics fail. Because the essence of magic isn’t in counting what happens most; it’s in recognizing what matters most, and when. Machines can model trends, but they can’t hold reverence for the moment that breaks the trend and changes a life.

Data without context is just noise. It’s the difference between a riddle and an answer. Take the Riddle of the Sphinx: What walks on four legs in the morning, two at noon, and three in the evening?

The answer—a human—only makes sense if you understand the metaphor of a life’s arc: crawling as a baby, walking as an adult, using a cane in old age. Without that narrative context, it’s just a strange math problem. Our ability to make meaning from data depends on knowing when, where, and for whom it was generated. When data is stripped from its context, we lose the thread that makes it intelligible, let alone actionable.

That’s what we do at Bast AI: we preserve the story around the signal, because in human terms, understanding why and when is often more important than simply knowing what.

3. Augmented inference (thank you, Dave Snowden)

Inference comes from the Latin "inferre," meaning "to bear or carry into." What do we carry into our thinking? Our creations? Our language?

Humans are natural inference engines. We extract meaning from one context and apply it to another. Knowing what is meaningful is our superpower, but it can also reveal the source of our biases.

The Cognitive Bias Codex maps the shortcuts our brains have evolved to help us survive—fast judgments, snap decisions, and heuristics tuned for uncertainty. These biases weren’t flaws; they were features that kept us alive when the world was unpredictable and the stakes were life or death. But today, many of those reflexes fire in systems we no longer fully understand—digital networks, global media, automated decisions. Now is the time to ask: do these shortcuts still serve us? Just because a bias was adaptive once doesn’t mean it belongs in our governance, hiring, healthcare, or data systems. If AI is going to reflect us, then we must reflect on ourselves.

Understanding our own cognitive biases isn’t just an intellectual exercise—it’s a moral one. It’s how we move from survival to stewardship.

What if augmented inference is our version of phonics—a way to carry human experience to AI behavior? A mirror reflecting the biases we have? A bridge between bias and awareness?

Augmented inference isn't about outsourcing our thinking but extending it across scale, speed, and surprise. When done well, it helps us:

Process information at scales no human could handle

Spot patterns we wouldn't naturally choose or notice

Connect ideas across distant domains of knowledge

See differently, offering perspectives we hadn't considered

Test hypotheses across thousands of scenarios

The promise of AI isn't about automation. It's about accelerating human understanding.

Stories from 2035

Imagine a future where AI helps us understand ourselves better:

The universal translator of thought: Where a visual-spatial learner struggling with physics and a sequential-processing surgeon both receive information in their cognitive "native language."

The gardens of digital creation: Where creators and individuals maintain dignity and agency over their data and creative works in a mutually beneficial ecosystem.

A human-centered future: Where technology finally adapts to being human instead of humans adapting to technology.

These aren't just aspirational fiction—they're portals that ask: What if we design technology from a deeper understanding of ourselves?

The light inside

Pablo Neruda asked, "Is four the same four for everyone?" It's not just a poetic riddle—it's a moral question, an epistemological question, a question about power and the quiet codes written into our systems.

When we build artificial intelligence, whose light are we modeling? Whose vision are we encoding?

Because four is not the same four when you've been excluded from the equation, and seven doesn't feel like seven when the system wasn't built for you to win.

The real future of AI isn't about speed—it's about symbiosis. To move from pilots to platforms, we need collaborations across disciplines. Engineers, artists, caregivers, historians, plumbers, construction workers—we need each other.

Don't let AI define your expertise. Bring your expertise to define AI.

We are not merely users of technology but gatherers of light. In our hands, we hold tools and the power to reconnect what was shattered: our wisdom, stories, and shared humanity.

This blog accompanies my keynote presentation. Join me this week as we explore the metamorphosis that happens when we use technology to catalyze our transformation back to the essence of what makes life rich and meaningful: our humanity, our embodied experiences, and our lives.

Notes on how this was built

This keynote was a collaborative act—between me, my lived experience, and a suite of AI tools designed to help me think, visualize, write, and structure ideas:

ChatGPT (GPT-4o) – for outlining, drafting, and inference validation

Claude 3.7 – for summarizing complexity and conversational tone

You.com / DeepSeek – for domain-specific search and grounded citations

Beautiful.ai – for slide creation

Midjourney – for visuals that sparked metaphor and emotion

We should be transparent about the tools we use. Like any artifact, they carry assumptions, limitations, and affordances. But they can also enhance our imagination.

Bibliography

Moore, Geoffrey A. Crossing the Chasm. HarperBusiness, 1991.

Neruda, Pablo. The Book of Questions. Copper Canyon Press, 2001.

Wolf, Maryanne. Proust and the Squid. Harper, 2007.

The Open Group. Data Scientist Profession Framework, 2021.

IBM Design. Everyday Ethics for AI. https://www.ibm.com/design/ai/ethics/everyday-ethics

Tippett, Krista. "The Wisdom of Pulling Back from the Brink." On Being, 2023.

Munroe, Randall. "XKCD #1838: Machine Learning." XKCD, 2017.

Radiolab. "Stochasticity." WNYC Studios, 2009.

Sinclair, Upton. I, Candidate for Governor. UC Press, 1994.

Benson, Buster. "The Cognitive Bias Codex." Better Humans, 2016.

IBM Academy of Technology. "Titanic Project." Internal case study, 2020.

Mead, Margaret. Often attributed quote on social change.

Duckworth, Sylvia. "Wheel of Power/Privilege." https://sylviaduckworth.com

Sophocles (via Oedipus). The Riddle of the Sphinx.

Snowden, David. Cynefin Framework. Cognitive Edge.

WhatIfpedia. "Ontology-based Information Reception Model."
